Meridian, a 2016 Netflix release, is available for uncompressed download for engineers, production artists, or anyone else who wants to practice their media processing skills on studio-quality production material. Many thanks are due to Netflix for releasing this title under the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.

(CC) Netflix licensed under CC BY-NC-ND 4.0

The film is described as “cerebral, full of suspense, chilling, ominous.” I don’t know about the story, but those words certainly describe the process of obtaining and using this film’s downloadable media assets. It is released in multiple color renderings on two different sites, some versions in Interoperable Master Format (IMF) and other encodings wrapped in MP4 packages.

With a name like “Interoperable Master Format,” you know the IMF package is likely to present some barriers to actually accessing the elementary audio and video media. IMF adds a layer of packaging on top of Material eXchange Format (MXF), which by itself is always a challenge to wrap and unwrap.
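That layer of packaging is a set of XML files sitting next to the MXF track files (an ASSETMAP, a packing list, and a Composition Playlist) that refer to assets by UUID rather than by file name. As a first step, here is a minimal Python sketch that resolves asset UUIDs to file paths. It assumes an ASSETMAP laid out per SMPTE ST 429-9 (`Asset` entries, each with an `Id` and a `ChunkList/Chunk/Path`) and ignores XML namespaces so either AssetMap namespace works. The sample UUID and file name are hypothetical placeholders, not values from the Meridian package.

```python
import xml.etree.ElementTree as ET


def local_name(tag: str) -> str:
    """Strip any '{namespace}' prefix so lookups don't depend on the namespace URI."""
    return tag.rsplit("}", 1)[-1]


def asset_paths(assetmap_xml: str) -> dict:
    """Map each asset UUID in an ASSETMAP document to its first chunk's file path."""
    root = ET.fromstring(assetmap_xml)
    paths = {}
    for asset in root.iter():
        if local_name(asset.tag) != "Asset":
            continue
        asset_id = None
        path = None
        for child in asset.iter():
            name = local_name(child.tag)
            if name == "Id" and asset_id is None:
                asset_id = (child.text or "").strip()
            elif name == "Path" and path is None:
                path = (child.text or "").strip()
        if asset_id and path:
            paths[asset_id] = path
    return paths


# Hypothetical sample: the UUIDs and file name below are placeholders.
SAMPLE_ASSETMAP = """<AssetMap xmlns="http://www.smpte-ra.org/schemas/429-9/2007/AM">
  <Id>urn:uuid:00000000-0000-0000-0000-000000000000</Id>
  <AssetList>
    <Asset>
      <Id>urn:uuid:11111111-1111-1111-1111-111111111111</Id>
      <ChunkList>
        <Chunk><Path>video_track.mxf</Path></Chunk>
      </ChunkList>
    </Asset>
  </AssetList>
</AssetMap>"""

print(asset_paths(SAMPLE_ASSETMAP))
```

Matching on local tag names is a deliberate shortcut; a stricter reader would check the namespace and handle assets split across several chunks.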

In this post we’ll enter the Meridian cave armed with only a flashlight and ffmpeg. When we emerge, we’ll have elementary stream files in hand and know what color rendering they belong to.
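A minimal Python sketch of the ffmpeg side, assuming `ffprobe` and `ffmpeg` are on the PATH and that ffmpeg's MXF demuxer reports the color fields for a given track file (when it doesn't, they come back as `"unknown"`). The rendering labels in `guess_rendering` are hypothetical groupings by transfer function, not Meridian's official rendering names, and the file names in the usage lines are placeholders.

```python
import json
import subprocess

# Color fields that ffprobe reports per video stream when the demuxer knows them.
COLOR_KEYS = ("color_primaries", "color_transfer", "color_space")


def ffprobe_command(path: str) -> list:
    """Build an ffprobe call that dumps every stream's properties as JSON."""
    return ["ffprobe", "-v", "error", "-show_streams", "-of", "json", path]


def extract_command(path: str, stream: str, out: str) -> list:
    """Build an ffmpeg call that copies one stream (e.g. "a:0") without re-encoding.

    Whether stream copy succeeds depends on the codec and the output container
    (PCM audio into a .wav file is a safe case).
    """
    return ["ffmpeg", "-i", path, "-map", f"0:{stream}", "-c", "copy", out]


def color_tags(ffprobe_json: str) -> list:
    """Collect the color fields of each video stream from ffprobe JSON output."""
    streams = json.loads(ffprobe_json).get("streams", [])
    return [
        {"index": s.get("index"), "codec": s.get("codec_name"),
         **{k: s.get(k, "unknown") for k in COLOR_KEYS}}
        for s in streams
        if s.get("codec_type") == "video"
    ]


def guess_rendering(tags: dict) -> str:
    """Hypothetical labels keyed on ffmpeg's names for primaries and transfer."""
    primaries = tags.get("color_primaries")
    transfer = tags.get("color_transfer")
    if transfer == "smpte2084":
        return "HDR, PQ transfer"
    if transfer == "arib-std-b67":
        return "HDR, HLG transfer"
    if primaries == "bt709" and transfer == "bt709":
        return "SDR, Rec. 709"
    return "unknown"


def probe(path: str) -> list:
    """Run ffprobe on one track file and return its video streams' color tags."""
    result = subprocess.run(ffprobe_command(path), capture_output=True,
                            text=True, check=True)
    return color_tags(result.stdout)
```

Usage against hypothetical track files:

```python
for tags in probe("video_track.mxf"):
    print(tags, guess_rendering(tags))
subprocess.run(extract_command("audio_track.mxf", "a:0", "audio.wav"), check=True)
```

Parsing ffprobe's JSON rather than its default text output keeps the color lookup a plain dictionary access.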

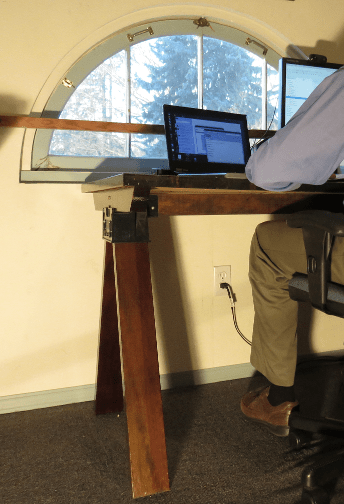

Some decades ago I arrived in Portland to relaunch an engineering career after a short, mistaken move to Westchester County, New York. One of my first actions getting set up in Oregon was to build my own desk. There was no money to buy one because I had just returned money for moving expenses to my previous employer.